
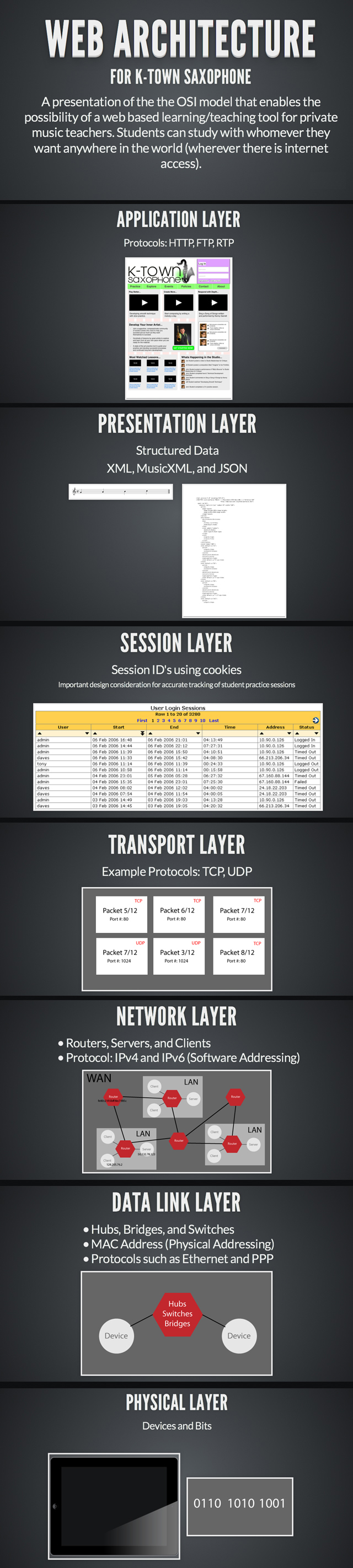

The slide show to the left considers how the OSI model can guide my thinking as I continue to work on a Web-enabled application. The application supports online music tutoring sessions between teachers and students, as well as music collaboration between students. The application layer is the top layer, where data is processed for display and used by music teachers and students. Because this is an in-browser application, the most common protocol at this level is HTTP (Hypertext Transfer Protocol), which is used for sharing data. Another widely used protocol is FTP (File Transfer Protocol), which transfers files directly between two hosts without processing them. A third common protocol is RTP (Real-time Transport Protocol), which is widely used to stream real-time audio and video, for example in video conferencing.
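To make the application layer more concrete, here is a minimal Python sketch of what an HTTP/1.1 request looks like on the wire: plain text lines ending in CRLF, followed by an empty line. The host name `tutor.example.com` and the path `/api/lessons/42` are hypothetical placeholders, not part of the actual application.

```python
def build_get_request(host: str, path: str) -> bytes:
    """Build a minimal HTTP/1.1 GET request as the raw bytes sent to the server."""
    lines = [
        f"GET {path} HTTP/1.1",    # request line: method, target, protocol version
        f"Host: {host}",           # the Host header is required in HTTP/1.1
        "Accept: application/json",
        "Connection: close",       # ask the server to close the connection afterwards
        "",                        # an empty line ends the header section
        "",
    ]
    return "\r\n".join(lines).encode("ascii")  # HTTP/1.1 lines end with CRLF


def parse_status_code(response: bytes) -> int:
    """Read the numeric status code from the first line of an HTTP/1.1 response."""
    status_line = response.split(b"\r\n", 1)[0].decode("ascii")
    parts = status_line.split(" ", 2)  # e.g. ["HTTP/1.1", "200", "OK"]
    return int(parts[1])


# Hypothetical host and path, for illustration only.
request = build_get_request("tutor.example.com", "/api/lessons/42")
print(request.decode("ascii"))
print(parse_status_code(b"HTTP/1.1 200 OK\r\nContent-Type: application/json\r\n\r\n{}"))
```

In the browser, the browser itself builds and sends these messages (for example, when page scripts call `fetch`), so application code does not write them by hand. The sketch only makes the text format visible.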

The application layer also provides APIs (Application Programming Interfaces). These let developers use the internal functions of a web service in their own applications. For example, Google provides APIs for many of its products so that other applications can use its search and other data services. This music learning application will use the APIs of several web services, including Spotify, SoundCloud, Google+, and Google Hangouts. These APIs make it possible to build powerful, helpful tools and services for students and teachers alike.

The presentation layer gives data a structure and places it in context, describing how it relates to other pieces of data. Several protocols can do this, but one of the more common ones is XML (Extensible Markup Language). XML provides a common language, so that data can be converted from one coding system to another. This structured data can then be passed to the application layer for further processing and display. XML-based document formats, such as the .docx format used by Microsoft Word, allow documents to be shared across operating system platforms and applications, for example between Microsoft Word, Apple Pages, and Google Docs. Music notation programs use MusicXML to structure music notation, which allows data to be transferred between applications such as Finale, Sibelius, and the online notation editor Noteflight. The presentation slide shows a simple measure of music on the left and a portion of the MusicXML document for that same measure on the right, as an example of how music data is structured in XML. JSON (JavaScript Object Notation) is another standard way to structure data. It organizes data as key:value pairs that form the properties of an object, and the value of any property can itself be an object with its own key:value pairs.
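The presentation layer's job of converting data from one structured format to another can be sketched in Python with the standard library's `xml.etree.ElementTree` and `json` modules. The sketch assumes a small, hand-written MusicXML measure (four quarter notes, C D E F, in 4/4 time) rather than the one on the slide. The JSON key names, such as `beatType`, are an illustrative choice and not part of any standard.

```python
import json
import xml.etree.ElementTree as ET

# A hypothetical one-measure score using MusicXML's partwise structure.
MEASURE_XML = """<score-partwise version="3.1">
  <part-list>
    <score-part id="P1"><part-name>Piano</part-name></score-part>
  </part-list>
  <part id="P1">
    <measure number="1">
      <attributes>
        <divisions>1</divisions>
        <time><beats>4</beats><beat-type>4</beat-type></time>
        <clef><sign>G</sign><line>2</line></clef>
      </attributes>
      <note><pitch><step>C</step><octave>4</octave></pitch><duration>1</duration><type>quarter</type></note>
      <note><pitch><step>D</step><octave>4</octave></pitch><duration>1</duration><type>quarter</type></note>
      <note><pitch><step>E</step><octave>4</octave></pitch><duration>1</duration><type>quarter</type></note>
      <note><pitch><step>F</step><octave>4</octave></pitch><duration>1</duration><type>quarter</type></note>
    </measure>
  </part>
</score-partwise>"""


def measure_to_dict(xml_text: str) -> dict:
    """Pull the time signature and notes of the first measure into nested dicts."""
    root = ET.fromstring(xml_text)
    measure = root.find("./part/measure")
    notes = [
        {
            "step": note.findtext("pitch/step"),
            "octave": int(note.findtext("pitch/octave")),
            # Measured in divisions; here <divisions>1</divisions> makes 1 = one quarter note.
            "duration": int(note.findtext("duration")),
            "type": note.findtext("type"),
        }
        for note in measure.findall("note")
    ]
    return {
        "measure": int(measure.get("number")),
        "time": {  # a nested object as the value of a property
            "beats": int(measure.findtext("attributes/time/beats")),
            "beatType": int(measure.findtext("attributes/time/beat-type")),
        },
        "notes": notes,
    }


print(json.dumps(measure_to_dict(MEASURE_XML), indent=2))
```

The same measure now exists in both formats. XML uses nested elements, and JSON uses nested key:value objects such as `"time": {"beats": 4, "beatType": 4}`.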

The session layer supports user authentication. It can also track data about a user, such as the kind of device they are using and the activities they perform during a session. In web applications, this is most commonly done with session IDs, which the server issues and the browser stores in cookies. A session ID allows many request/response exchanges without the user having to re-authenticate each time. This is what lets people shop in an online store after logging in only once. It is also an important part of verifying users in data-sensitive applications such as banking sites. Session IDs can be set to expire after anywhere from a few seconds to several years.

For this music learning application, session ID design will be critical. It determines whether teachers and students get accurate feedback about how much time is spent in the application and which features are used most. Because people often practice music in sessions, this layer maps closely onto how the application is used. However, a session ID only registers interactions between client and server. The expiration time must therefore allow for periods when a user is still actively using the application without making any server requests, such as reading a screen of music while playing their instrument. On the other hand, a long enough period of inactivity may mean that a student has switched to a different application, or left the computer altogether, without logging out.

To let a single device run multiple networked applications, the transport layer breaks data down so it can be sent over a network, and it reassembles data received from the network. The transport layer works with IP addresses from the network layer and adds port numbers to each data packet, so that a specific application is bound to a particular port. This allows the machine to deliver each incoming packet to the correct application.
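The session-expiration trade-off described above can be sketched as a small server-side session store in Python. This is a minimal sketch under several assumptions:

- sessions are kept in memory;
- while a score is on screen, the client page sends a lightweight periodic request (a "heartbeat"), so that reading music still counts as activity;
- the names `SessionStore`, `idle_timeout`, and `max_lifetime` are hypothetical.

Gaps between requests that are shorter than the idle timeout are counted as time spent in the application. This is the kind of usage data teachers and students would see.

```python
import secrets
import time
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class Session:
    user_id: str
    created: float
    last_seen: float
    active_seconds: float = 0.0  # time counted as real use of the application


class SessionStore:
    """In-memory sessions with an idle timeout and an absolute lifetime (hypothetical design)."""

    def __init__(self, idle_timeout: float, max_lifetime: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.idle_timeout = idle_timeout  # seconds without any request before expiry
        self.max_lifetime = max_lifetime  # hard cap, even for active sessions
        self.clock = clock                # injectable so the logic can be tested
        self._sessions: Dict[str, Session] = {}

    def create(self, user_id: str) -> str:
        """Log a user in and return the ID the server would place in a cookie."""
        session_id = secrets.token_urlsafe(32)  # unguessable random token
        now = self.clock()
        self._sessions[session_id] = Session(user_id, created=now, last_seen=now)
        return session_id

    def touch(self, session_id: str) -> Optional[Session]:
        """Validate the session on each request or heartbeat; None means log in again."""
        session = self._sessions.get(session_id)
        if session is None:
            return None
        now = self.clock()
        idle = now - session.last_seen
        if idle > self.idle_timeout or now - session.created > self.max_lifetime:
            del self._sessions[session_id]  # expired: the student has probably left
            return None
        session.active_seconds += idle  # the gap was short enough to count as use
        session.last_seen = now
        return session
```

Consider a 10-minute idle timeout combined with a heartbeat every minute or two while a score is open. A student reading and playing from the screen keeps the session alive. A student who walks away is logged out after about ten minutes without requests. In the browser, the ID would typically travel in a cookie marked `HttpOnly` and `Secure`.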

At this layer, data streams are broken down into manageable packets and ordered. TCP (Transmission Control Protocol) guarantees reliable, ordered delivery of packets. Other protocols, such as UDP (User Datagram Protocol), guarantee neither delivery nor ordering. With those protocols, the receiving application must buffer the packets and reassemble the data itself. The diagram on the slide shows data packets from two different applications: one using TCP, with its ordered delivery, and one using UDP, with no guarantee of ordered delivery. The transport layer also manages flow control, so that no bottleneck occurs when the sending and receiving sides transfer data at different paces.

At the network layer, standard IP addressing allows each device participating in the application to be uniquely identified. At the data link layer, reliability is handled on a case-by-case basis for each physical device that participates in the application. The physical layer covers the hardware that transmits data as bits, represented as 0s and 1s. For this music learning tool, devices need a touch screen, a microphone, and a camera, as many popular tablets, laptops, and desktop computers have today.

Because reading music notation is such an important part of the application, a device could be designed specifically for it. One example is a tablet with the screen size and resolution of many laptop or desktop displays that is still as thin and light as current tablets. The extra screen space would mean fewer "page turns" in many musical examples. It would also allow larger notation symbols that are easier to read from farther away, such as on a music stand. The device could also have a high-quality microphone designed for musical instruments, rather than the voice-oriented microphones that many devices have today.
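The earlier transport-layer discussion noted that UDP leaves ordering to the application. In that case, the receiver needs a buffer that holds early packets until the missing ones arrive. The Python sketch below assumes each packet carries a sequence number, as RTP packets do. The `ReorderBuffer` class is hypothetical, and for simplicity it ignores the wraparound of RTP's 16-bit sequence numbers.

```python
from typing import Dict, List


class ReorderBuffer:
    """Release packets in sequence order, holding early arrivals until gaps are filled."""

    def __init__(self, first_seq: int = 0) -> None:
        self.next_seq = first_seq             # sequence number expected next
        self.pending: Dict[int, bytes] = {}   # packets that arrived ahead of a gap

    def receive(self, seq: int, payload: bytes) -> List[bytes]:
        """Accept one packet and return every payload that is now in order."""
        if seq < self.next_seq or seq in self.pending:
            return []  # duplicate or already-delivered packet: drop it
        self.pending[seq] = payload
        ready: List[bytes] = []
        while self.next_seq in self.pending:
            ready.append(self.pending.pop(self.next_seq))
            self.next_seq += 1
        return ready


# Packets arriving out of order, including one duplicate.
buffer = ReorderBuffer()
for seq, payload in [(1, b"B"), (0, b"A"), (3, b"D"), (2, b"C"), (2, b"C")]:
    print(seq, buffer.receive(seq, payload))
```

For live lesson audio, a real receiver could not wait indefinitely for a lost packet. It would typically give up after a short deadline and skip or conceal the gap. TCP avoids that loss by retransmitting, at the cost of added delay.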

The application may include synchronous as well as asynchronous communication. If so, a video camera with zoom capability, plus event listeners that allow networked control of the camera, would be a valuable design feature. It would let a teacher or fellow student in a lesson control a student's camera and zoom in or out as needed to see details up close, such as fingers or embouchure. The device should also have several USB ports to connect the wide array of MIDI keyboards and devices currently available. Ports for Layer 2 (data link) technologies, such as Ethernet, also need to be considered, although a wireless network connection is ideal for the user's mobility. Letting my imagination go further, I wonder whether the screen should be built right into a music stand. However, this would make the device less mobile and less useful for other purposes.
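The networked camera control described above could be organized around event listeners, as in this Python sketch. Everything here is hypothetical and illustrative: the JSON message format (`type`, `from`, `zoom`), the `EventBus` and `Camera` classes, and the 1x to 4x zoom range. A real implementation would call the device's camera API inside `set_zoom`.

```python
import json
from collections import defaultdict
from typing import Callable, DefaultDict, List, Set

Listener = Callable[[dict], None]


class EventBus:
    """Route incoming network messages to the listeners registered for their type."""

    def __init__(self) -> None:
        self._listeners: DefaultDict[str, List[Listener]] = defaultdict(list)

    def on(self, event_type: str, listener: Listener) -> None:
        self._listeners[event_type].append(listener)

    def dispatch(self, raw: str) -> None:
        message = json.loads(raw)  # assumes messages arrive as JSON text
        for listener in self._listeners.get(message.get("type"), []):
            listener(message)


class Camera:
    """Stand-in for the student's camera; only tracks the current zoom level."""

    def __init__(self, min_zoom: float = 1.0, max_zoom: float = 4.0) -> None:
        self.min_zoom, self.max_zoom = min_zoom, max_zoom
        self.zoom = min_zoom

    def set_zoom(self, level: float) -> None:
        # Clamp to the range the (hypothetical) hardware supports.
        self.zoom = max(self.min_zoom, min(self.max_zoom, level))


def make_zoom_listener(camera: Camera, allowed_senders: Set[str]) -> Listener:
    """Only approved lesson participants, such as the teacher, may move the camera."""
    def on_zoom(message: dict) -> None:
        if message.get("from") in allowed_senders:
            camera.set_zoom(float(message["zoom"]))
    return on_zoom


camera = Camera()
bus = EventBus()
bus.on("camera.zoom", make_zoom_listener(camera, {"teacher-1"}))
bus.dispatch('{"type": "camera.zoom", "from": "teacher-1", "zoom": 2.5}')
print(camera.zoom)
```

Restricting who may send zoom commands matters because the camera belongs to the student. In practice, the list of allowed senders would come from the authenticated lesson session.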